Like Atlantis, Video Pinball is another game where DQN and its derivatives do very well compared to humans, scoring tens of times higher than us. I looked at the game and trained an agent using Nathan Sprague’s code, which you can get from https://github.com/spragunr/deep_q_rl, to see what was happening.

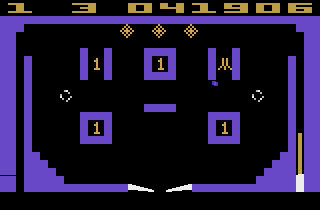

In summary: patience was what was happening. The secret to the game, or at least to how DQN manages to score highly, is very frequent and well-timed bumping, which puts and keeps the ball in a vertical bounce pattern across one of the two point-gathering zones that you can see surrounded by the vertical bars at the left and right near the top of the screen, like this:

It’s not easy to tell but there’s a lot of nudging of the ball going on while it’s in flight.

Here’s the usual learning graph:

On the left is the average score per epoch, on the right the maximum score per epoch. For reference, the DeepMind papers tend to list human scores of around 20,000. As noted at the bottom, top human scores are actually far above this.
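If you want to compute the same two curves from your own runs, here is a minimal sketch. It assumes you have already collected one `(epoch, score)` pair per test episode; the function name `per_epoch_stats` and that input format are my own placeholders, not something deep_q_rl provides.

```python
from collections import defaultdict


def per_epoch_stats(episodes):
    """Return {epoch: (mean_score, max_score)} from (epoch, score) pairs.

    `episodes` is a hypothetical input format: one pair per test episode.
    """
    scores_by_epoch = defaultdict(list)
    for epoch, score in episodes:
        scores_by_epoch[epoch].append(score)
    return {
        epoch: (sum(scores) / len(scores), max(scores))
        for epoch, scores in sorted(scores_by_epoch.items())
    }


# Made-up numbers, purely to show the shape of the result.
stats = per_epoch_stats([(1, 100), (1, 300), (2, 50)])
```

The mean is the curve on the left and the max is the curve on the right. A single lucky episode can pull the max far above the mean, which is worth keeping in mind when reading the right-hand plot.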

The progress video is not very interesting. The untrained network doesn’t know how to launch the ball, so there’s nothing to see. It then learns to launch the ball and perform almost constant but undirected bumps, which get it to around 20,000 points (around “human level”), before discovering its trick, after which the scores take off. There’s probably more noticeable progress along the line related to recovery techniques in the cases where the ball escapes, but it doesn’t feel worth trawling through hours of video to discover them.

Overall, as in the case of Atlantis, I think this game is “solved”, and it shouldn’t be used for algorithm comparison in a quantitative way. It could just be a binary “does it discover the vertical trapping technique?”, like Atlantis is about “does it discover that shooting those low fast-flying planes is all important?”, and that’s all. The differences in score between algorithms probably have more to do with the random seed used during the test run.

Unlike in Atlantis, these scores aren’t actually superhuman. TwinGalaxies reports a top score of around 38 million. You can watch this run in its full 5-hour glory here: http://www.twingalaxies.com/showthread.php/173072. This is still far, far above whatever DQN gets.

I’ve uploaded the best-performing network to http://organicrobot.com/deepqrl/video_pinball_20170129_e41_doubleq_dc48a1bab611c32fa7c78f098554f3b83fb5bb86.pkl.gz. You’ll need to get Theano from around January 2017 to run it (say commit 51ac3abd047e5ee4630fa6749f499064266ee164), since I trained this back then and they’ve changed their format since. I think I used the double Q algorithm, but I imagine it doesn’t make much difference whether you use that or standard DQN.
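For anyone who wants to poke at the file directly, here is a rough sketch of opening a gzipped pickle in Python. The helper name `load_pickled_network` is made up for illustration and is not part of deep_q_rl. I am also assuming that a matching Theano (and whatever else the network object depends on) is importable in the same environment, since unpickling will fail otherwise. As with any pickle, only load files you trust.

```python
import gzip
import pickle


def load_pickled_network(path):
    """Unpickle one object from `path`, handling both .gz and plain files.

    Hypothetical helper: the classes referenced inside the pickle
    (here, presumably Theano-based ones) must be importable.
    """
    opener = gzip.open if path.endswith(".gz") else open
    with opener(path, "rb") as f:
        return pickle.load(f)
```

You would call it with the local path of the downloaded file, for example `load_pickled_network("video_pinball_20170129_e41_doubleq_dc48a1bab611c32fa7c78f098554f3b83fb5bb86.pkl.gz")`.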