DeepMind’s papers show their algorithm doing better than some random person at a game they don’t play much, using a keyboard, in a five-minute session (“expert human player” scoring 31 on Breakout, c’mon). What they don’t try to show is their programs getting better scores than human experts at their own games. I am not really sure why they chose the 5-minute limit for reporting, but the rest of us don’t have to abide by it. Here I show that if you remove the 5-minute limit, the algorithm can beat the best human scores in at least one game.

One of the games that stands near the top of the DeepQ / human ratio in their papers is Atlantis. It’s a game where planes fly overhead and you shoot them down from one of three guns. Most planes that fly past are harmless and are only there to be shot for points. Every now and then a plane comes across firing a laser downwards, and if you fail to shoot it down it will destroy one of your guns or one of your other “buildings”. When all of your buildings are destroyed, the game is over. One unusual feature of the game is that your buildings and guns reappear if you score enough points.

It would be very easy to write a program to play this game almost perfectly if you could hand-code it. The sprites are pretty much always the same, the planes always appear at the same height and the game never changes. You could write an if-detect-plane-here-then-shoot program and it would do amazingly well. It’s a lot harder if, like DeepQ, you hand-code almost nothing.
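To make the if-detect-plane-here-then-shoot idea concrete, here is a toy sketch. Everything in it is made up for illustration: the frame is a tiny grid of numbers rather than a real Atari screen, and the cell coordinates and action names are hypothetical, not taken from the game or from any emulator.

```python
# Toy rule-based policy. Coordinates and action names are hypothetical.
BACKGROUND = 0

# Hypothetical (row, col) cells where a shot from each gun would meet a plane.
TRIGGER_CELLS = {
    "FIRE_LEFT": (2, 3),
    "FIRE_CENTER": (1, 5),
    "FIRE_RIGHT": (2, 7),
}


def choose_action(frame):
    """Return the first gun whose trigger cell holds a non-background pixel."""
    for action, (row, col) in TRIGGER_CELLS.items():
        if frame[row][col] != BACKGROUND:
            return action
    return "NOOP"


# A 4x10 "screen" with one plane pixel above the centre gun.
frame = [[BACKGROUND] * 10 for _ in range(4)]
frame[1][5] = 1
print(choose_action(frame))  # FIRE_CENTER
```

The point is only that a hand-written rule like this needs to know where to look and what a plane looks like, which is exactly the knowledge DeepQ has to discover for itself.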

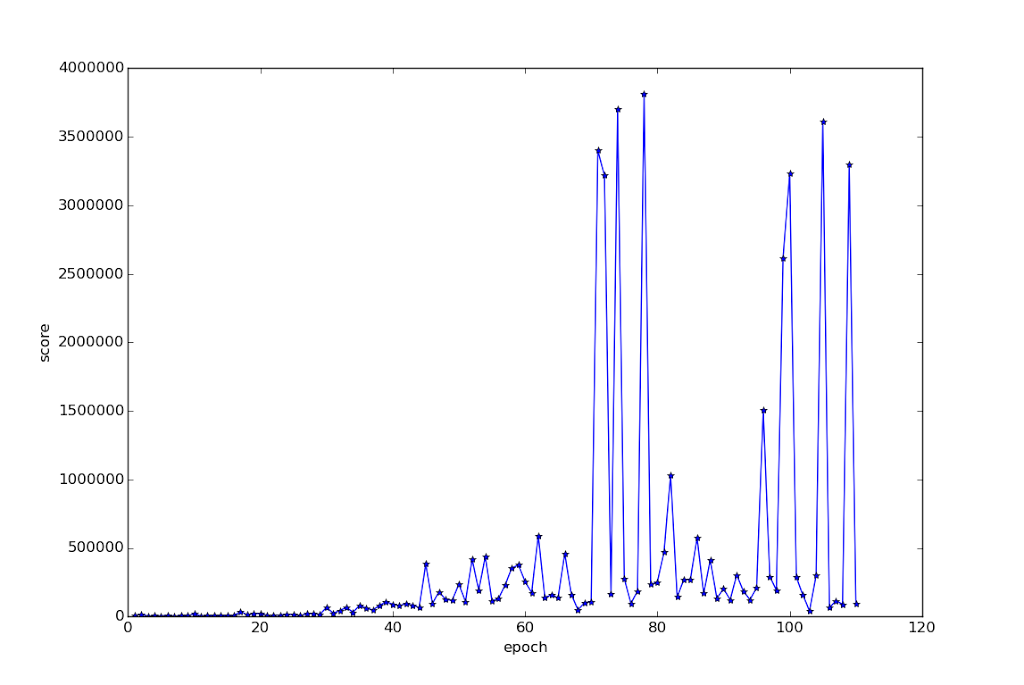

DeepQ learns to play this game relatively quickly. There is constant feedback from shooting down planes, and because of the loss-of-life marker, the program gets instant feedback when one of its buildings or guns is destroyed. Here’s the training graph for it.

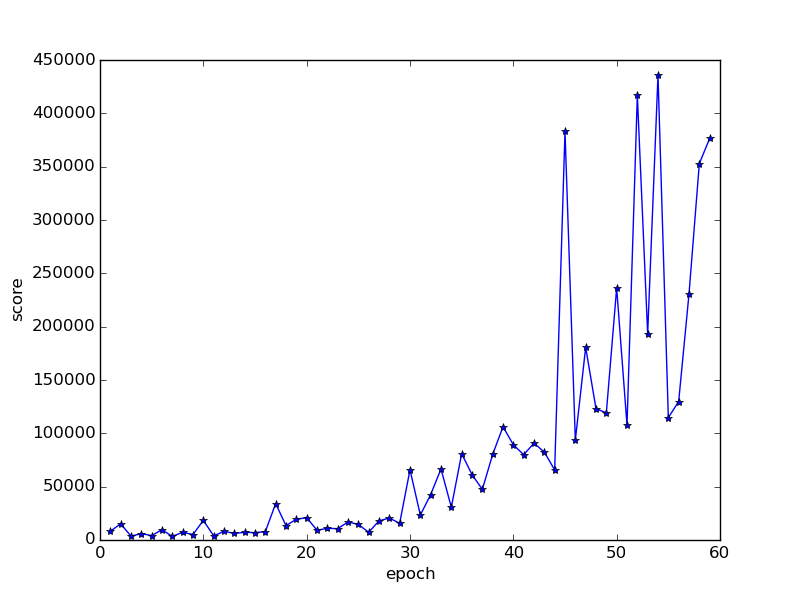

And here’s the first 60 epochs or so, since the later epochs are hiding the early landscape:

As you can see, the learning is quite gradual until the huge spikes. There is even quite a bit of improvement before epoch 30 that is hidden in the zoomed-in image, although given the constant feedback I’d have expected it to improve even faster. The scores are very high from epoch 40 onwards. For reference, the scores quoted in DeepMind’s Nature paper ( http://www.nature.com/nature/journal/v518/n7540/full/nature14236.html ) are around 30000 for humans and 85000 for DQN.

The spikes on the first graph are huge, but they turn out to be artificially LOW because of the limit of playing time imposed on the testing period during training (125,000 actions, which is roughly two hours 20 minutes of play). I grabbed the model that produced the highest one, on epoch 78, and set it to play with unlimited time. After over 20 hours of gameplay, with the emulator running very slowly (bug yet to be investigated), and me doubting it was ever going to finish the game, I killed the run.
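As a quick check of that playing-time figure, here is the arithmetic as a small sketch. It assumes each action is held for 4 emulator frames and that the game runs at 60 frames per second. Neither number is stated above, so treat both as assumptions, and the function name is just for illustration.

```python
def play_time_seconds(actions, frame_skip=4, fps=60):
    """Seconds of game time covered by `actions` agent steps.

    frame_skip and fps are assumed values, not taken from the post.
    """
    return actions * frame_skip / fps


total_minutes = round(play_time_seconds(125_000) / 60)
hours, minutes = divmod(total_minutes, 60)
print(f"{hours} h {minutes} min")  # 2 h 19 min
```

Under those assumptions the cap works out to just under two hours 20 minutes of game time.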

I’ve put the first 9 and a quarter hours of the game on YouTube:

It scores around 15 million points during the video, and I think over 30 million during the run (it isn’t easy to check, since the score counter clocks over every million, so you have to find every rollover during the run). For comparison, the highest score recorded on Twin Galaxies is around 6.6 million for someone using a real Atari, and around 10 million for someone using an emulator.
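Since the displayed score wraps around every million, recovering the true total means counting the wraps. A minimal sketch of that bookkeeping follows. It assumes you have a list of displayed scores sampled often enough that the counter never wraps twice between samples, and that the score never goes down otherwise. The function name and the sample readings are invented for illustration, not data from the run.

```python
def total_score(readings, wrap=1_000_000):
    """Reconstruct the true score from displayed readings that wrap at `wrap`."""
    if not readings:
        return 0
    rollovers = 0
    for previous, current in zip(readings, readings[1:]):
        # A drop in the displayed value means the counter wrapped once.
        if current < previous:
            rollovers += 1
    return rollovers * wrap + readings[-1]


# Invented readings: the counter wraps twice.
samples = [0, 400_000, 900_000, 100_000, 800_000, 50_000]
print(total_score(samples))  # 2050000
```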

If you watch the game even for a little bit you can tell that the computer doesn’t play very well at all. It misses most of its shots. What it does super-humanly, though, is kill the laser-shooting planes, and since they are the only thing that can kill you, it can play for a very long time.

For those of you who want to try this out, I used Nathan Sprague’s brilliant reimplementation of DeepMind’s DeepQ algorithm which you can get at https://github.com/spragunr/deep_q_rl. I ran it with the “Nature”-sized model. You can also download the pre-trained model from http://organicrobot.com/deepqrl/atlantis_20151122_e78_94f7d724f69291ecfe23ac656aef38c63a776b8d.pkl.gz
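The `.gz` suffix suggests the model file is gzip-compressed, so it probably needs unpacking before use. Here is a minimal sketch using only the Python standard library, assuming you have already downloaded the file. The helper name `gunzip` and the local file names are hypothetical. Actually loading the resulting pickle needs the deep_q_rl code and its dependencies to be importable, which this sketch does not attempt.

```python
import gzip
import shutil


def gunzip(src_path, dst_path):
    """Decompress a gzip file at src_path into dst_path."""
    with gzip.open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        shutil.copyfileobj(src, dst)


# Example call with hypothetical local file names:
# gunzip("atlantis_model.pkl.gz", "atlantis_model.pkl")
```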